Obstacle Avoidance

Safe autonomous navigation of ground robots using depth sensing.

Overview

Obstacle avoidance is a core capability for autonomous robots operating in real-world environments. Applications range from hospital robots navigating crowded hallways, to indoor delivery and cleaning systems, to autonomous vehicles and search-and-rescue platforms operating in hazardous conditions.

This project focuses on developing and evaluating depth-camera–based obstacle avoidance algorithms for ground robots. Our goal was to explore how different perception and planning pipelines affect safety and performance, and to understand the tradeoffs between local reactive methods and global planning approaches.

Problem Setup

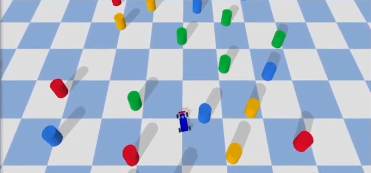

The robot operates in a simulated environment built using PyBullet, consisting of:

- An empty plane

- Randomly generated cylindrical obstacles

The robot follows a standard Ackermann drive model, parameterized by:

- Position and orientation

- Steering angle

- Linear velocity
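One common way to step an Ackermann-steered robot forward in time is the kinematic bicycle model. The sketch below assumes that model, a hypothetical `wheelbase` parameter, and a heading measured counterclockwise from the x-axis. It is not necessarily the exact model used inside the simulation.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float      # position [m]
    y: float      # position [m]
    theta: float  # heading [rad], counterclockwise from +x


def step(state: State, v: float, delta: float, wheelbase: float, dt: float) -> State:
    """Advance a kinematic bicycle (Ackermann) model by one Euler step.

    v is the linear velocity [m/s], delta the steering angle [rad] (positive = left),
    and wheelbase is a hypothetical axle-to-axle distance [m].
    """
    x = state.x + v * math.cos(state.theta) * dt
    y = state.y + v * math.sin(state.theta) * dt
    theta = state.theta + (v / wheelbase) * math.tan(delta) * dt
    theta = (theta + math.pi) % (2 * math.pi) - math.pi  # wrap heading to [-pi, pi)
    return State(x, y, theta)
```

For example, `step(State(0.0, 0.0, 0.0), v=0.5, delta=0.2, wheelbase=0.3, dt=0.05)` moves the robot 2.5 cm forward and turns it slightly to the left.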

A forward-facing depth camera provides distance measurements to obstacles directly ahead. The objective is to navigate safely around obstacles while maintaining a reasonable speed.

Performance is evaluated using:

- Average distance traveled without collision

- Average speed
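As a concrete reading of these two metrics, the helper below averages per-trial results. It makes three assumptions:

- Each trial is logged as a hypothetical `(distance_m, duration_s)` pair.
- Each pair is recorded up to the first collision or the end of the run.
- "Average speed" means the mean of the per-trial speeds. Pooling total distance over total time would be an equally reasonable definition.

```python
from statistics import mean


def summarize(trials: list[tuple[float, float]]) -> tuple[float, float]:
    """Return (average distance without collision [m], average speed [m/s]).

    Each trial is a hypothetical (distance_m, duration_s) record, measured until the
    first collision or the end of the run.
    """
    avg_distance = mean(d for d, _ in trials)
    avg_speed = mean(d / t for d, t in trials)  # mean of per-trial speeds
    return avg_distance, avg_speed
```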

Method 1: Planning-Based Obstacle Avoidance

Perception Pipeline

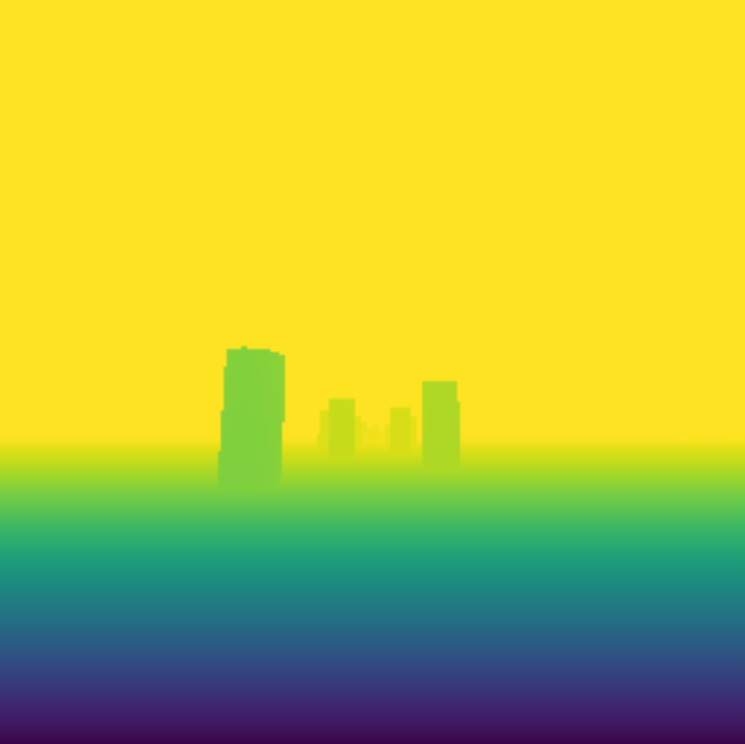

The planning-based approach follows a multi-stage pipeline:

- Receive depth images from the camera

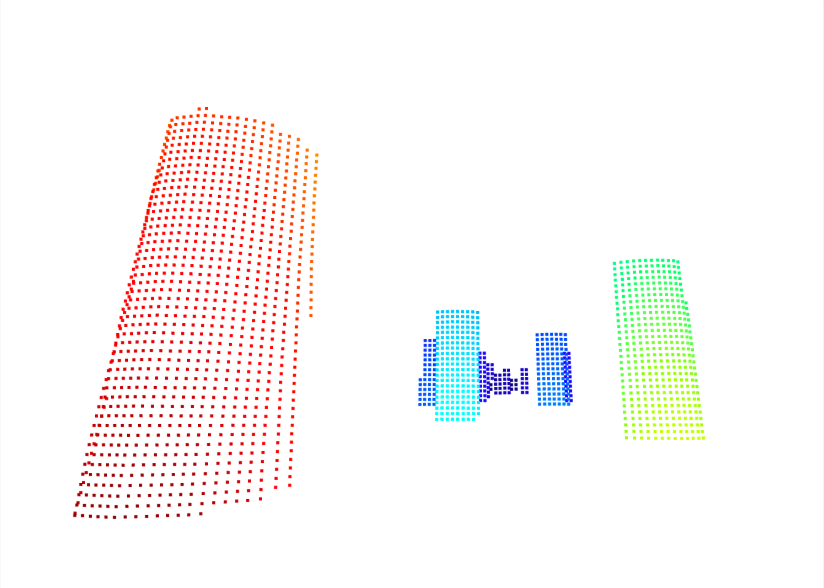

- Convert depth images into a 3D point cloud

- Project the point cloud into a 2D occupancy map

- Inflate obstacles in the map to maintain safe clearance

- Use A* to plan a collision-free path
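The first three stages (depth image, point cloud, 2D map) can be sketched in plain Python as follows. The sketch makes these assumptions:

- PyBullet returns an OpenGL-style depth buffer, with values in [0, 1] that must be linearized using the near and far clipping planes.
- The camera is a pinhole camera whose vertical field of view `fov_deg` matches the one passed to `computeProjectionMatrixFOV`, with square pixels.
- The camera frame has x pointing right, y down, and z forward.

The camera height, grid resolution, and obstacle height band are hypothetical parameters.

```python
import math


def linearize_depth(d_buf: float, near: float, far: float) -> float:
    """Map an OpenGL/PyBullet depth-buffer value in [0, 1] to metric z-depth [m]."""
    return far * near / (far - (far - near) * d_buf)


def depth_to_points(depth_buf, fov_deg, near, far):
    """Back-project a depth buffer (list of pixel rows) to 3D camera-frame points."""
    h, w = len(depth_buf), len(depth_buf[0])
    f = (h / 2) / math.tan(math.radians(fov_deg) / 2)  # focal length [px], fx == fy
    cx, cy = (w - 1) / 2, (h - 1) / 2                  # principal point at image center
    points = []
    for v, row in enumerate(depth_buf):
        for u, d in enumerate(row):
            if d >= 1.0:  # far plane: nothing was hit
                continue
            z = linearize_depth(d, near, far)
            points.append(((u - cx) * z / f, (v - cy) * z / f, z))
    return points


def points_to_grid(points, cam_height, res, n_rows, n_cols, min_h=0.05, max_h=1.0):
    """Project points into a 2D grid: row = forward distance, column = lateral offset."""
    grid = [[0] * n_cols for _ in range(n_rows)]
    for x, y, z in points:
        height = cam_height - y  # y points down, so this is height above the ground
        if not (min_h <= height <= max_h):
            continue  # drop ground returns and anything above the robot
        r = math.floor(z / res)
        c = math.floor(x / res) + n_cols // 2  # center column = straight ahead
        if 0 <= r < n_rows and 0 <= c < n_cols:
            grid[r][c] = 1
    return grid
```

The loops are written out for clarity. A real pipeline would typically vectorize them with NumPy. Small errors in `fov_deg`, `near`, or `far` distort every back-projected point, which is one way camera intrinsics can corrupt the map.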

A* path planning with inflated obstacles
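The last two stages, obstacle inflation and A* search, might look like the sketch below. It makes these assumptions:

- The occupancy map is a list of rows, with 1 marking occupied cells.
- Obstacles are inflated by a hypothetical radius in cells, roughly the robot's half-width plus a margin, divided by the grid resolution.
- The search runs on an 8-connected grid with a Euclidean heuristic. This heuristic is admissible for unit straight steps and √2 diagonal steps.

```python
import heapq
import math


def inflate(grid, radius_cells):
    """Mark every cell within radius_cells (Euclidean) of an obstacle as occupied."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if not grid[r][c]:
                continue
            for dr in range(-radius_cells, radius_cells + 1):
                for dc in range(-radius_cells, radius_cells + 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and dr * dr + dc * dc <= radius_cells ** 2:
                        out[rr][cc] = 1
    return out


def astar(grid, start, goal):
    """8-connected A* over free (0) cells; returns a list of (row, col) or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    open_heap = [(h(start), 0.0, start)]  # entries are (f = g + h, g, cell)
    g = {start: 0.0}
    came_from = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:  # walk parents back to the start
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > g[cell]:
            continue  # stale heap entry superseded by a cheaper one
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = cell[0] + dr, cell[1] + dc
                if (dr, dc) == (0, 0) or not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                    continue
                new_cost = cost + math.hypot(dr, dc)  # 1 straight, sqrt(2) diagonal
                if new_cost < g.get((nr, nc), math.inf):
                    g[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None
```

Diagonal moves can clip obstacle corners. Running `astar` on the output of `inflate` absorbs this, because the inflated margin keeps the true obstacle away from any cell the path touches.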

Path Following

Once a path is generated:

- The robot tracks it with a proportional (P) controller

- The controller steers the robot toward successive waypoints

- Velocity is held constant during execution
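A minimal version of this proportional waypoint follower is sketched below. The gain `kp`, the waypoint-reached radius `reach_dist`, and the steering limit are hypothetical values. The heading error is wrapped so the robot always turns the short way. Speed is left to the caller, matching the constant-velocity setup.

```python
import math


def p_steering(x, y, theta, waypoints, idx, kp=1.0, reach_dist=0.3, max_steer=math.radians(30)):
    """Proportional steering toward waypoints[idx]; returns (steer [rad], updated idx).

    Positive steering turns left. Waypoints closer than reach_dist are skipped.
    """
    while idx < len(waypoints) - 1 and math.hypot(waypoints[idx][0] - x, waypoints[idx][1] - y) < reach_dist:
        idx += 1  # current waypoint reached: move on to the next one
    tx, ty = waypoints[idx]
    error = math.atan2(ty - y, tx - x) - theta
    error = math.atan2(math.sin(error), math.cos(error))  # wrap to [-pi, pi]
    steer = max(-max_steer, min(max_steer, kp * error))   # P term, clipped to the steering limit
    return steer, idx
```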

This approach emphasizes global structure and explicit safety margins but depends heavily on map accuracy.

Method 2: Local Reactive Obstacle Avoidance

We also implemented a simpler, purely reactive method that does not rely on mapping or global planning.

Depth-Based Steering

- The depth image is split into left and right halves

- Depth values in each half are summed

- The robot steers toward the side with greater free space (larger depth sum)

This approach is lightweight, fast, and avoids errors introduced by imperfect mapping.

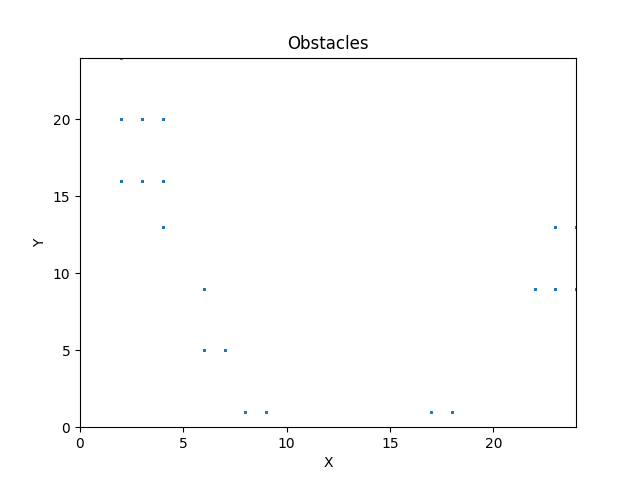
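The left/right comparison reduces to a few lines. This sketch makes these assumptions:

- The input is a metric depth image given as a list of pixel rows.
- Positive steering means a left turn.
- A hypothetical gain and steering limit turn the normalized depth imbalance into a steering angle.

The original implementation may map the imbalance to a steering command differently.

```python
def reactive_steering(depth, gain=1.0, max_steer=0.5):
    """Steer toward the image half whose summed depth (free space) is larger.

    depth: metric depth image as a list of pixel rows. Returns a steering angle in
    radians, positive = left, clipped to +/- max_steer.
    """
    half = len(depth[0]) // 2
    left = sum(sum(row[:half]) for row in depth)
    right = sum(sum(row[half:]) for row in depth)
    total = left + right
    if total == 0:
        return 0.0
    imbalance = (left - right) / total  # in [-1, 1]; > 0 means more room on the left
    return max(-max_steer, min(max_steer, gain * imbalance))
```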

Optimal Target Selection

To improve steering behavior, we computed an optimal target direction by:

- Identifying gaps large enough for the robot to pass through

- Selecting the gap closest to the robot’s current trajectory

- Minimizing unnecessary lateral movement

Selection of optimal target gap for navigation
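One way to realize these three steps is sketched below. It assumes a one-dimensional depth profile (for example, the minimum depth in each image column) and a focal length `f_px` in pixels. The free-space distance and the safety margin are hypothetical values. The sketch then works as follows:

- A gap's physical width is estimated with the pinhole model at the free-space distance.
- Among passable gaps, it picks the one whose center bearing is closest to straight ahead, which keeps lateral motion small.

```python
import math


def find_gaps(profile, free_dist):
    """Return inclusive (start, end) column ranges where every depth exceeds free_dist."""
    gaps, start = [], None
    for u, d in enumerate(profile):
        if d > free_dist and start is None:
            start = u
        elif d <= free_dist and start is not None:
            gaps.append((start, u - 1))
            start = None
    if start is not None:
        gaps.append((start, len(profile) - 1))
    return gaps


def select_target(profile, f_px, free_dist, robot_width, margin=0.1):
    """Bearing [rad, + = left] of the passable gap nearest straight ahead, or None."""
    cx = (len(profile) - 1) / 2
    best = None
    for a, b in find_gaps(profile, free_dist):
        width_m = (b - a + 1) * free_dist / f_px  # pinhole: lateral span at range free_dist
        if width_m < robot_width + 2 * margin:
            continue  # too narrow for the robot plus clearance
        bearing = math.atan2(cx - (a + b) / 2, f_px)
        if best is None or abs(bearing) < abs(best):
            best = bearing  # prefer the gap needing the least turn
    return best
```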

Results & Evaluation

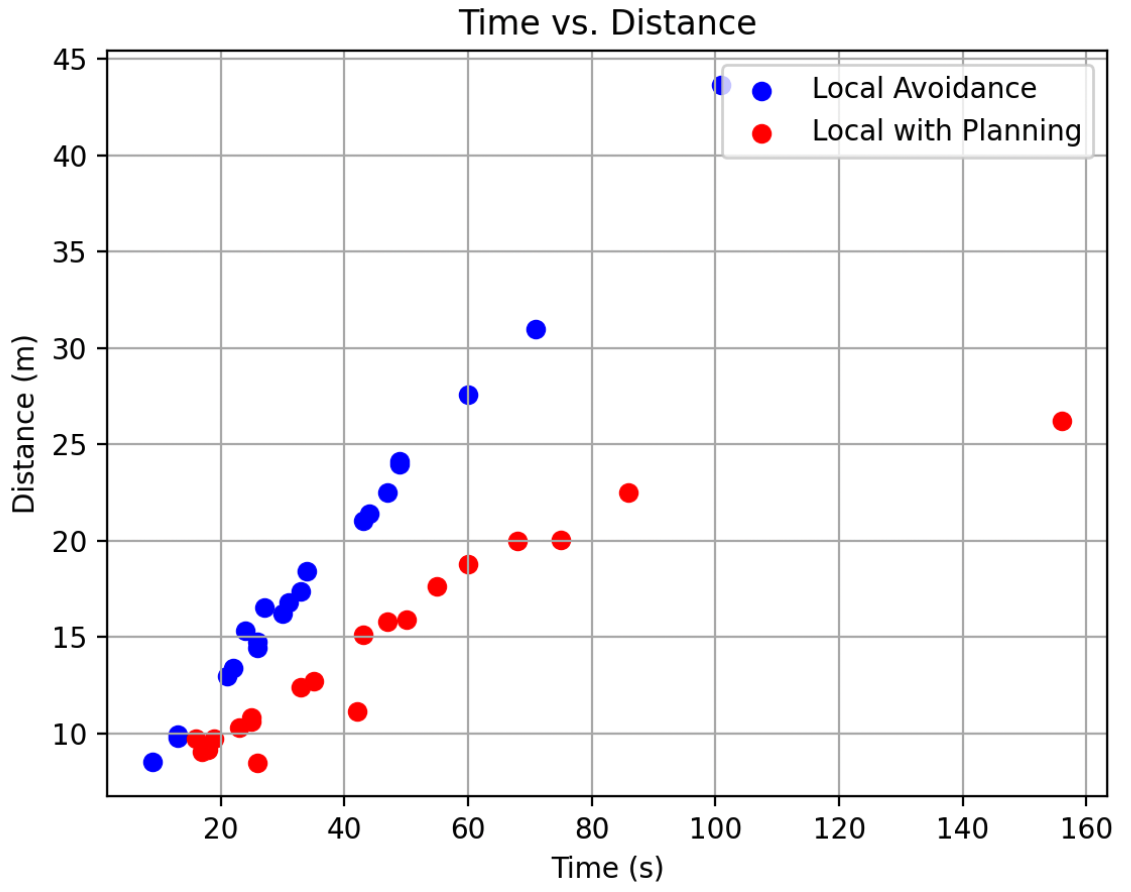

Each algorithm was tested over 20 independent trials.

Performance Metrics

Local Reactive Avoidance

- Average distance traveled without collision: 19.56 m

- Average speed: 0.52 m/s

Planning + Local Avoidance

- Average distance traveled without collision: 13.33 m

- Average speed: 0.29 m/s

Comparison of performance with and without planning

Surprisingly, the local-only method outperformed the combined planning approach: it traveled farther before colliding and moved faster on average. We believe this is primarily due to map inaccuracies stemming from the PyBullet camera intrinsics, which made it difficult to build an accurate local map. Because the planner relied on this map, these inaccuracies occasionally led to collisions even while the robot was following a planned path.

Discussion

This project highlights an important insight in robotics:

More complex pipelines do not always lead to better real-world performance.

While planning-based approaches provide structure and interpretability, they are sensitive to perception errors. In contrast, simple reactive methods can be more robust when sensing is noisy or imperfect.

Future Work

Potential directions for improvement include:

- Improved map accuracy through better camera calibration and higher-resolution maps

- Advanced path-following controllers, such as Pure Pursuit combined with PID control

- Adaptive velocity control that slows near obstacles and accelerates on clear paths

- Dynamic environments with moving obstacles of varying shapes and speeds
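The second and third items could start from the standard Pure Pursuit steering law and a simple linear speed ramp, both sketched below.

The Pure Pursuit formula is δ = atan(2L sin α / l_d), where:

- L is the wheelbase.
- α is the bearing of the lookahead point relative to the robot's heading.
- l_d is the distance to the lookahead point.

The formula assumes the robot's position is measured at the rear axle. The speed limits and slow-down distances are hypothetical, and the PID layer mentioned above is not shown.

```python
import math


def pure_pursuit_steer(x, y, theta, target, wheelbase):
    """Pure Pursuit steering angle [rad, + = left]; (x, y) is the rear-axle position."""
    dx, dy = target[0] - x, target[1] - y
    ld = math.hypot(dx, dy)             # distance to the lookahead point
    alpha = math.atan2(dy, dx) - theta  # target bearing relative to the heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


def adaptive_speed(nearest_obstacle, v_max=1.0, d_stop=0.3, d_slow=2.0):
    """Scale speed linearly from 0 at d_stop [m] up to v_max at d_slow [m] and beyond."""
    ratio = (nearest_obstacle - d_stop) / (d_slow - d_stop)
    return v_max * max(0.0, min(1.0, ratio))
```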

Demo

Full demonstration of obstacle avoidance behaviors